I visited a small local grocery store that happens to be in a touristy part of my neighborhood. If you’ve ever traveled abroad, you’ve probably visited a store like that to stock up on bottled water without buying the overpriced hotel equivalent. This was one of those stores.

To my misfortune, my visit happened to coincide with a group of tourists arriving all at once to buy drinks and warm up (it’s winter!).

It just so happens that selecting drinks is often much faster than buying fruit, which was the reason for my visit. So after I had picked some delicious apples and grapes, I ended up waiting in line behind 10 people. And there was a single cashier to serve us all. The tourists didn’t seem to mind the wait (they were all chatting in line), but I sure wished the store had more cashiers so I could get on with my day faster.

What Does This Have To Do With System Performance?

You’ve probably experienced a similar situation yourself and have your own story to tell. It happens so frequently that we sometimes forget how applicable these situations can be to other domains, including distributed systems. Sometimes when you evaluate a new solution, the results don’t meet your expectations. Why is latency high? Why is the throughput so low? Those are two of the top questions that pop up every now and then.

Many times, these challenges can be resolved by optimizing your performance testing approach and by making better use of your solution’s potential. As you’ll realize, improving the performance of a distributed system is a lot like ensuring speedy checkouts in a grocery store.

This blog covers 7 performance-focused steps for you to follow as you evaluate distributed systems performance.

Step 1: Measure Time

With groceries, the first step towards any serious performance optimization is to precisely measure how long it takes a single cashier to scan a barcode. Some goods, like bulk fruits that require weighing, may take longer to scan than products in industrial packaging.

A common misconception is that processing happens in parallel. It doesn’t (note: we’re not referring to capabilities like SIMD and pipelining here). Cashiers don’t serve more than a single person at a time, nor do they scan your products’ barcodes simultaneously. Likewise, a single CPU in a system will process one work unit at a time, no matter how many requests are sent to it.

In a distributed system, consider all the different work units you have and execute them in isolation against a single shard. Run each type of work item with single-threaded execution and measure how many requests per second the system can process.

Eventually, you may learn that different requests get processed at different rates. For example, if the system is able to process a thousand 1 KB requests/sec, the average latency is 1 ms. Similarly, if throughput is 500 requests/sec for a larger payload size, then the average latency is 2 ms.
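The arithmetic above can be sketched in a few lines of Python. This assumes strictly single-threaded execution (one request in flight at a time), so the average latency is simply the inverse of the measured throughput. The function name is illustrative, not part of any tool.

```python
def avg_latency_ms(requests_per_sec: float) -> float:
    """Average per-request latency when only one request is in flight."""
    if requests_per_sec <= 0:
        raise ValueError("throughput must be positive")
    # One second (1000 ms) divided across the requests completed in it.
    return 1000.0 / requests_per_sec


print(avg_latency_ms(1000))  # the 1 KB requests from the example
print(avg_latency_ms(500))   # the larger payloads from the example
```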

Step 2: Find the Saturation Point

A cashier is not scanning barcodes all the time. Sometimes, they will be idle waiting for customers to place their items onto the checkout counter, or waiting for payment to complete. This introduces delays you’ll typically want to avoid.

Likewise, every request your client submits against a system incurs, for example, network round trip time, and you will always pay a penalty under low concurrency. To eliminate this idleness and further increase throughput, simply increase the concurrency. Do it in small increments until you observe that the throughput saturates and the latency starts to grow.

Once you reach that point, congratulations! You have effectively reached the system’s limits. In other words, unless you manage to get your work items processed faster (for example, by reducing the payload size) or tune the system to work more efficiently with your workload, you won’t achieve gains past that point.

You definitely don’t want to find yourself in a situation where you are constantly pushing the system against its limits, though. Once you reach the saturation area, fall back to lower concurrency numbers to account for growth and unpredictability.
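Here is a rough sketch of that incremental search. It runs against a toy model of one shard rather than a real system: `simulate`, the 1 ms service time, the 4 ms round trip, and the 5% gain threshold are all assumptions made up for illustration. In a real test you would replace `simulate` with an actual measurement at each concurrency level.

```python
def simulate(concurrency, service_ms=1.0, rtt_ms=4.0):
    """Toy closed-loop model of one shard: returns (ops/sec, latency in ms)."""
    # Below saturation, latency is service time plus round trip.
    # Past saturation, requests queue behind each other on the shard.
    latency_ms = max(service_ms + rtt_ms, concurrency * service_ms)
    throughput = concurrency * 1000.0 / latency_ms
    return throughput, latency_ms


def find_saturation(max_concurrency=16, min_gain=0.05):
    """Raise concurrency by 1 until throughput stops improving by min_gain."""
    best_c = 1
    best_tput, _ = simulate(1)
    for c in range(2, max_concurrency + 1):
        tput, _ = simulate(c)
        if tput < best_tput * (1 + min_gain):
            break  # throughput has flattened; only latency grows from here
        best_c, best_tput = c, tput
    return best_c, best_tput


print(find_saturation())
```

With these made-up numbers the model flattens at a concurrency of 5. One step further, throughput stays the same while latency rises from 5 ms to 6 ms.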

Step 3: Add More Workers

If you live in a busy area, grocery store demand might be beyond what a single cashier can sustain. Even if the store happened to hire the fastest cashier in the world, they would still be overwhelmed as demand/concurrency increases.

Once the saturation point is reached, it’s time to hire more workers. In the distributed systems case, this means adding more shards to the system to scale throughput under the latency you’ve previously measured. This leads us to the following formula:

Number of Workers = Target Throughput / Single Worker Limit

You already discovered the performance limits of a single worker in the previous exercise. To find the total number of workers you need, simply divide your target throughput by how much a single worker can sustain under your defined latency requirements.
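As a quick sketch of that division in Python: the function name is made up, and rounding up to a whole worker is my assumption, since you cannot deploy a fraction of a shard.

```python
import math


def workers_needed(target_ops_per_sec: float, single_worker_ops_per_sec: float) -> int:
    """Number of workers = target throughput / single worker limit, rounded up."""
    if single_worker_ops_per_sec <= 0:
        raise ValueError("single worker limit must be positive")
    return math.ceil(target_ops_per_sec / single_worker_ops_per_sec)


# Example: a 100K ops/sec target with workers that each sustain 5K ops/sec.
print(workers_needed(100_000, 5_000))
```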

Distributed systems like ScyllaDB provide linear scale, which simplifies the math (and total cost of ownership [TCO]). In fact, as you add more workers, chances are that you’ll achieve even higher rates than under a single worker. The reason has to do with network IRQs and is out of scope for this write-up (but see this perftune docs page for some details).

Step 4: Increase Parallelism

Think about it. The total time to check out an order is driven by the number of items in a cart divided by the speed of a single cashier. Instead of putting all the pressure on a single cashier, wouldn’t it be far more efficient to divide the items in your shopping cart (our work) and distribute them among friends who could then check out in parallel?

Often the number of work items you need to process will not match the number of available cashiers. For example, if you have 100 items to check out but there are only 5 cashiers, you would route 20 items per counter.

You might wonder: “Why shouldn’t I instead route only 5 customers with 20 items each?” That’s a great question, and you probably should do that, rather than having the store’s security kick you out.

When designing real-time low-latency OLTP systems, however, you mostly care about the time it takes for a single work unit to get processed. Although it is possible to “batch” multiple requests against a single shard, it is far more difficult (though not impossible) to consistently do so in such a way that every item is owned by that specific worker.

The solution is to always dispatch individual requests one at a time. Keep concurrency high enough to overcome external delays like client processing time and network RTT, and introduce more clients for higher parallelism.
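One way to picture this on the client side is the sketch below, which uses Python’s `asyncio`. Each key goes out as its own request, and a semaphore caps how many are in flight at once. `fake_send` is a hypothetical stand-in for whatever driver call you use, and the 1 ms sleep only mimics a network round trip.

```python
import asyncio


async def dispatch_individually(keys, send, max_in_flight):
    """Send one request per key, never more than max_in_flight at once."""
    gate = asyncio.Semaphore(max_in_flight)

    async def one(key):
        async with gate:  # wait here if the concurrency budget is used up
            return await send(key)

    # gather() returns results in the same order as the input keys.
    return await asyncio.gather(*(one(k) for k in keys))


def demo(num_keys=20, max_in_flight=4):
    """Run against a fake shard and report the peak in-flight count."""
    state = {"in_flight": 0, "peak": 0}

    async def fake_send(key):
        # Hypothetical stand-in for a real driver call.
        state["in_flight"] += 1
        state["peak"] = max(state["peak"], state["in_flight"])
        await asyncio.sleep(0.001)  # pretend network round trip
        state["in_flight"] -= 1
        return key * 2

    results = asyncio.run(
        dispatch_individually(range(num_keys), fake_send, max_in_flight)
    )
    return results, state["peak"]
```

Calling `demo()` returns the responses plus the highest number of requests that were ever in flight together, which never exceeds `max_in_flight`.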

Step 5: Avoid Hotspots

Even after several cashiers get hired, it sometimes happens that a long line of customers queues behind a handful of them. More often than not, you should be able to find less busy (or even totally free) cashiers simply by walking through the hallway.

This is known as a hotspot, and it often gets triggered by unbounded concurrency. It manifests in multiple ways. A common situation is when you have a traffic spike to a few popular items (load). That momentarily causes a single worker to queue a considerable number of requests. Another example: low cardinality (uneven data distribution) prevents you from fully benefiting from the increased workforce.

There’s also another commonly overlooked situation that frequently arises. It’s when you dispatch too much work for a single worker to coordinate, and that single worker depends on other workers to complete the task. Let’s get back to the shopping analogy:

Assume you’ve found yourself on a blessed day as you approach the checkout counters. All cashiers are idle and you can choose any of them. After most of your items get scanned, you say, “Dear Mrs. Cashier, I want one of those whiskies sitting in your locked closet.” The cashier then calls for another employee to pick up your order. A few minutes later, you realize: “Oops, I forgot to pick up my toothpaste,” and another idling cashier nicely goes and picks it up for you.

This approach introduces a few problems. First, your payment needs to be aggregated by a single cashier: the one you ran into when you approached the checkout counter. Second, although we parallelized, the “main” cashier will be idle waiting for the others to complete, adding delays. Third, further delays may be introduced between each additional and individual request completion: for example, when the keys of the locked closet are only held by a single employee, the total latency will be driven by the slowest response.

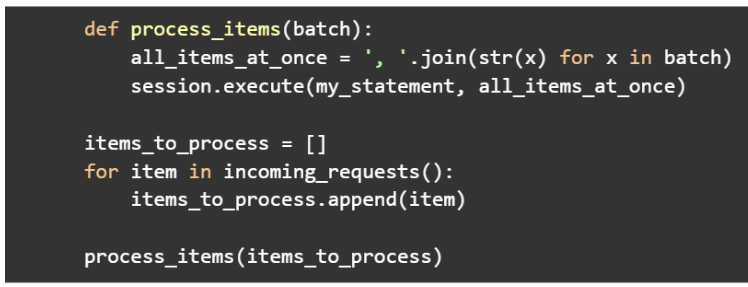

Consider the following pseudocode:
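A minimal sketch of the pattern, with made-up numbers: `shard_of` is a toy placement function and `SHARD_LATENCY_MS` holds hypothetical per-shard response times, neither taken from a real driver. A coordinator that receives one multi-key request has to wait for every owning shard, so the caller sees the slowest response.

```python
# Hypothetical per-shard response times in milliseconds (illustrative only).
SHARD_LATENCY_MS = {0: 1.0, 1: 1.0, 2: 8.0}


def shard_of(key: str, num_shards: int = 3) -> int:
    """Toy placement: sum of the key's bytes modulo the shard count."""
    return sum(key.encode()) % num_shards


def batched_latency_ms(keys):
    """One multi-key request: the coordinator waits for the slowest shard."""
    return max(SHARD_LATENCY_MS[shard_of(k)] for k in keys)


def individual_latencies_ms(keys):
    """One request per key: each key only pays for its own shard."""
    return {k: SHARD_LATENCY_MS[shard_of(k)] for k in keys}


keys = ["a", "b", "c"]  # these land on shards 1, 2 and 0 respectively
print(batched_latency_ms(keys))
print(individual_latencies_ms(keys))
```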

See that? Don’t do that. The previous pattern works well when there’s a single work unit (or shard) to route requests to. Key-value caches are a great example of how multiple requests can get pipelined together for higher efficiency. Once we introduce sharding into the picture, this becomes a great way to undermine your latencies, for the reasons outlined earlier.

Step 6: Limit Concurrency

When more clients are introduced, it’s like customers inadvertently ending up at the supermarket during rush hour. Suddenly, they can easily end up in a situation where many customers all decide to queue at a handful of cashiers.

You previously discovered the maximum concurrency at which a single shard can service requests. These are hard numbers and, as you saw during small-scale testing, you won’t see any benefits if you try to push requests further. The formula goes like this:

Concurrency = Throughput * Latency

If a single shard sustains up to 5K ops/second under an average latency of 1 ms, then you can have up to 5 concurrent in-flight requests at any given time.
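The same calculation as a small Python sketch. The function name is illustrative, and the only subtlety is converting milliseconds to seconds before multiplying.

```python
def max_useful_concurrency(throughput_ops_per_sec: float, avg_latency_ms: float) -> float:
    """Concurrency = Throughput * Latency, with latency given in milliseconds."""
    return throughput_ops_per_sec * avg_latency_ms / 1000.0


# A single shard sustaining 5K ops/sec at 1 ms average latency.
print(max_useful_concurrency(5_000, 1))
```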

Later you added more shards to scale that throughput. Say you scaled to 20 shards for a total throughput goal of 100K ops/second. Intuitively, you’d think that your maximum useful concurrency would become 100. But there’s a problem.

Introducing more shards to a distributed system doesn’t increase the maximum concurrency that a single shard can handle. To continue the shopping analogy, a single cashier will continue to scan barcodes at a fixed rate, and if several customers line up waiting to get served, their wait time will increase.

To mitigate (though not necessarily prevent) that situation, divide the maximum useful concurrency among the number of clients. For example, if you’ve got 10 clients and a maximum useful concurrency of 100, then each client should be able to queue up to 10 requests across all available shards.

This generally works when your requests are evenly distributed. However, it can still backfire when you have a certain degree of imbalance. Say all 10 clients decided to queue at least one request at the same shard. At that point in time, that shard’s concurrency climbs to 10, double the maximum concurrency we initially discovered. As a result, latency increases, and so does your P99.
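The numbers from this example can be checked with a short sketch. Both helper names are made up for illustration. The per-shard limit of 5 is the single-shard figure worked out earlier from Concurrency = Throughput * Latency.

```python
def per_client_limit(max_useful_concurrency: int, num_clients: int) -> int:
    """Split the cluster-wide concurrency budget evenly among clients."""
    return max(1, max_useful_concurrency // num_clients)


def overload_factor(requests_on_shard: int, shard_limit: int) -> float:
    """How far one shard is pushed past the concurrency it can sustain."""
    return requests_on_shard / shard_limit


clients = 10
limit = per_client_limit(100, clients)  # each client may queue this many requests

# Imbalance: every client happens to send one request to the same shard.
hot_shard_requests = clients * 1
print(limit, overload_factor(hot_shard_requests, shard_limit=5))
```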

There are different approaches to prevent that situation. The right one to follow depends on your application and use case semantics. One option is to limit your client concurrency even further to minimize its P99 impact. Another strategy is to throttle at the system level, allowing each shard to shed requests as soon as it queues past a certain threshold.
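To illustrate the second strategy, here is a toy shard that sheds load once it has too many requests in flight. This is a sketch of the general idea only. The class, its threshold, and its counters are hypothetical and do not describe how any particular database implements shedding.

```python
class SheddingShard:
    """Toy shard that rejects new requests once too many are in flight."""

    def __init__(self, max_in_flight: int):
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self.shed = 0  # number of requests rejected so far

    def try_accept(self) -> bool:
        """Admit a request if there is room; otherwise shed it immediately."""
        if self.in_flight >= self.max_in_flight:
            self.shed += 1
            return False
        self.in_flight += 1
        return True

    def complete(self) -> None:
        """Mark one admitted request as finished, freeing a slot."""
        if self.in_flight > 0:
            self.in_flight -= 1


shard = SheddingShard(max_in_flight=5)
accepted = [shard.try_accept() for _ in range(10)]  # 10 clients arrive at once
print(accepted.count(True), shard.shed)
```

Rejected requests fail fast instead of inflating the queue, so the client can retry later or go elsewhere.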

Step 7: Consider Background Operations

Cashiers don’t work at their maximum speed at all times. Sometimes, they inevitably slow down. They drink water, eat lunch, go to the restroom, and eventually change shifts. That’s life!

It is now time for real-life production testing. Apply what you’ve learned so far and observe how the system behaves over long periods of time. Distributed systems often need to run background maintenance activities (like compactions and repairs) to keep things running smoothly.

In fact, that’s precisely the reason why I recommended that you stay away from the saturation area at the beginning of this article. Background tasks inevitably consume system resources, and are often tricky to diagnose. I commonly receive reports like “We observed a latency increase due to compactions,” only to find out later that the actual cause was something else; for example, a spike in queued requests to a given shard.

Irrespective of the cause, don’t try to “throttle” system tasks. They exist and need to run for a reason. Throttling their execution will likely backfire on you eventually. Yes, background tasks slow down a given shard momentarily (that’s normal!). Your application should simply prefer other less busy replicas (or cashiers) when it happens.
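One simple way to express “prefer the less busy replica” is to pick the replica with the fewest requests currently in flight. This is a minimal client-side sketch with made-up replica names. Real drivers may use different or more sophisticated load-balancing policies.

```python
def pick_replica(in_flight_by_replica: dict) -> str:
    """Return the replica with the fewest in-flight requests (ties: first listed)."""
    if not in_flight_by_replica:
        raise ValueError("no replicas available")
    return min(in_flight_by_replica, key=in_flight_by_replica.get)


# Replica "a" is busy (say, compacting), so requests go elsewhere for now.
print(pick_replica({"a": 7, "b": 2, "c": 2}))
```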

Applying These Steps

Hopefully, you are now empowered to address questions like “Why is latency high?” or “Why is throughput so low?” As you start evaluating performance, start small. This minimizes costs and gives you fine-grained control during each step.

If latencies are sub-optimal on a small scale, it either means you are pushing a single shard too hard, or that your expectations are off. Do not engage in larger-scale testing until you are happy with the performance a single shard gives you.

Once you feel comfortable with the performance of a single shard, scale capacity accordingly. Keep an eye on concurrency at all times and watch out for imbalances, mitigating or preventing them as needed. When you find yourself in a situation where throughput no longer increases but the system is idling, add more clients to increase parallelism.