Today, I want to share the story of a small open-source project I created just for fun and experimentation: ConsoleGpt. The process, results, and overall experience turned out to be fascinating, so I hope you find this story interesting and, perhaps, even inspiring. After all, I taught my project to understand commands given in plain language and do exactly what I want.

This story might spark new ideas for you, inspire your own experiments, or even motivate you to build a similar project. And of course, I’d be thrilled if you joined me in developing ConsoleGpt, whether by contributing new features, running it locally, or simply starring it on GitHub. Anyone who has ever worked on an open-source project knows how much even small support means.

Enough with the preamble: let’s dive into the story!

The Idea

When large language models (LLMs) first emerged, my immediate question was: what can we build with them? It was clear that their potential applications were virtually limitless, constrained only by imagination and willingness to experiment.

One evening, while reflecting on their capabilities, I came up with a curious idea: what if I could teach my project to talk to me in a common language? Imagine simply describing a task to it and having it automatically execute the necessary steps. That is how the idea for ConsoleGpt was born. This happened about a year and a half ago, long before LLM-based agents became a hot topic among developers and users. Even now, I believe this experiment remains relevant and valuable in terms of the experience I gained while playing with it.

The Implementation

So, how do you establish communication between a programmer and their project? There are several options, but the most natural and intuitive one is, of course, the command-line interface (CLI). It is a familiar tool for developers and well suited to low-level interaction with software. That is when I decided my project would be a console application.

But what exactly would this application do? The obvious answer was to execute commands or even a sequence of commands, process their results, and present them in the desired format. The concept is simple: the programmer writes a request in natural language, and the system interprets it and determines which console commands need to be executed and in what order. If one command depends on the result of another, the system runs them sequentially, transforming the data as needed.

To bring this idea to life, I chose PHP. I’ve always liked its simplicity, flexibility, and the ease of creating tools, both simple and complex. For the console application framework, I selected the popular symfony/console library, which offers everything needed to work with CLI commands. This meant my project could be integrated into any other application using symfony/console, making it not just an experiment but a potentially useful tool for other developers.

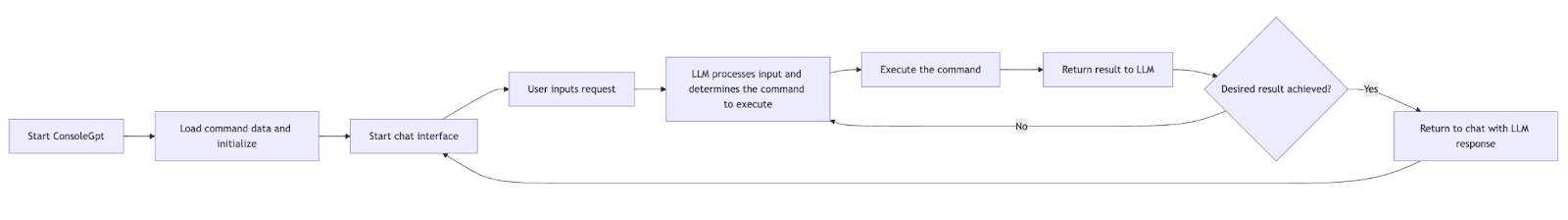

The console application serves as the interface, with commands acting as its building blocks. Here’s how the process flows:

- The user enters a request in natural language.

- The system uses an LLM to process the request and determine:

  - Which commands need to be executed.

  - The correct order for executing the commands.

  - How to handle the results of each command to complete the task.

- The console application then executes the identified commands and returns the results to the LLM, which processes the output and presents it to the user in the required format.
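The flow above can be sketched as a small loop. This is a minimal illustration, not ConsoleGpt's actual code: the command registry, the `fakeModel` stub, and the message format are all assumptions made so the example runs offline without an API key. A real implementation would replace `fakeModel` with a call to the language model.

```php
<?php
declare(strict_types=1);

// Hypothetical registry: command name => callable that runs it and returns its text output.
$commands = [
    'user:count'  => fn(array $args): string => '42',
    'report:send' => fn(array $args): string => "Report sent to {$args['email']}",
];

/**
 * Stand-in for the LLM. A real model would choose the next step itself;
 * this stub replays a fixed two-command plan based on how many results it has seen.
 * Returns ['call' => name, 'args' => [...]] or ['text' => final answer].
 */
function fakeModel(array $messages): array
{
    $results = array_values(array_filter($messages, fn($m) => $m['role'] === 'function'));

    return match (count($results)) {
        0 => ['call' => 'user:count', 'args' => []],
        1 => ['call' => 'report:send', 'args' => ['email' => 'admin@example.com']],
        default => ['text' => 'Counted ' . $results[0]['content'] . ' users; ' . $results[1]['content'] . '.'],
    };
}

/** Keep asking the model what to do until it answers in plain text or the step limit is hit. */
function runRequest(string $request, array $commands, callable $model, int $maxSteps = 10): string
{
    $messages = [['role' => 'user', 'content' => $request]];

    for ($step = 0; $step < $maxSteps; $step++) {
        $reply = $model($messages);
        if (isset($reply['text'])) {
            return $reply['text']; // the model is done; show its answer to the user
        }

        $name = $reply['call'];
        $output = isset($commands[$name])
            ? $commands[$name]($reply['args'])
            : "Unknown command: $name";

        // Feed the command output back so the next step can depend on it.
        $messages[] = ['role' => 'function', 'name' => $name, 'content' => $output];
    }

    return 'Stopped: step limit reached.';
}

echo runRequest('Count the users and email the report to the admin', $commands, 'fakeModel'), PHP_EOL;
// prints "Counted 42 users; Report sent to admin@example.com."
```

The step limit is a design choice worth keeping in any real version: it stops a confused model from calling commands in an endless loop.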

LLM Choice

As mentioned earlier, I used PHP for development and symfony/console as the foundation for the CLI application. For the language model, I opted for OpenAI’s GPT models (GPT-3, GPT-4, etc.). The reasons for this were simple and practical:

Ease of Integration

OpenAI provides an accessible API with excellent documentation and a sandbox for testing. Additionally, there are numerous libraries for nearly every programming language that serve as wrappers for the API, simplifying interaction with the model.

For my project, I used the well-maintained openai-php/client library, which had all the necessary functionality. The model is closed-source and hosted by OpenAI, so I don’t need to run it locally or spend a lot of time figuring out how it works. The downsides: it costs money (albeit relatively little), it is potentially unstable (the API might break or its format could change in the future), and it is potentially unreliable, since I depend on a third-party service provider over whom I have no control. Additionally, I had to share data with OpenAI when interacting with their models. However, these downsides weren’t critical for the task at hand.

High-Quality Models

At the time of development, OpenAI’s models were among the best general-purpose LLMs available; at least, that’s what OpenAI and user reports suggested. My goal wasn’t to find a niche solution or spend too much time fine-tuning. I simply wanted to quickly test whether it was possible to build a system that lets users “talk” to a program, and OpenAI’s models were ideal for this.

Function Calling

Implementing my idea without this feature would have been extremely difficult. To execute specific console commands, I needed the model to return data in a precise format (i.e., the command name and its parameters). Without structured outputs, language models might produce incorrect or unexpected results, breaking the application. Function calling has the model return its output in a predefined structure, logically separating user-addressed text from executable tasks.

In my project, each console command is represented as a function, with arguments and options serving as parameters. This setup allows ChatGPT to:

- Know which commands are available during a session.

- Understand the required parameters for each command.

- Automatically determine the sequence of command executions needed to achieve the desired result.

In addition to console command functions, I added several system functions, such as retrieving chat statistics, switching the language model in use, adjusting the context window size, and so on.
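To make the command-to-function mapping more concrete, here is a rough sketch of how one command description could be turned into a function definition in the JSON-schema style that OpenAI's function calling expects. The input array shape and the `commandToFunction` helper are hypothetical and exist only for this illustration; the library's own ConsoleCommandFunction class may work quite differently.

```php
<?php
declare(strict_types=1);

/**
 * Turn a simplified command description into an OpenAI-style function definition.
 * The input array shape is an assumption made for this sketch.
 */
function commandToFunction(array $command): array
{
    $properties = [];
    $required = [];

    foreach ($command['arguments'] as $name => $argument) {
        $properties[$name] = ['type' => 'string', 'description' => $argument['description']];
        if ($argument['required']) {
            $required[] = $name;
        }
    }

    foreach ($command['options'] as $name => $option) {
        // Flags without a value become booleans; options that take a value stay strings.
        $properties[$name] = [
            'type' => $option['acceptsValue'] ? 'string' : 'boolean',
            'description' => $option['description'],
        ];
    }

    return [
        // OpenAI function names allow only letters, digits, underscores, and dashes,
        // so "user:create" is exposed as "user_create".
        'name' => str_replace(':', '_', $command['name']),
        'description' => $command['description'],
        'parameters' => [
            'type' => 'object',
            // Cast to object so an empty parameter list encodes as {} rather than [].
            'properties' => (object) $properties,
            'required' => $required,
        ],
    ];
}

$function = commandToFunction([
    'name' => 'user:create',
    'description' => 'Create a new user',
    'arguments' => ['email' => ['description' => 'User email', 'required' => true]],
    'options' => ['admin' => ['description' => 'Grant admin rights', 'acceptsValue' => false]],
]);

echo json_encode($function, JSON_PRETTY_PRINT), PHP_EOL;
```

With definitions like this sent alongside each request, the model can reply with a function name and a JSON object of arguments instead of free text, which the application then maps back to the original command.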

Project Structure

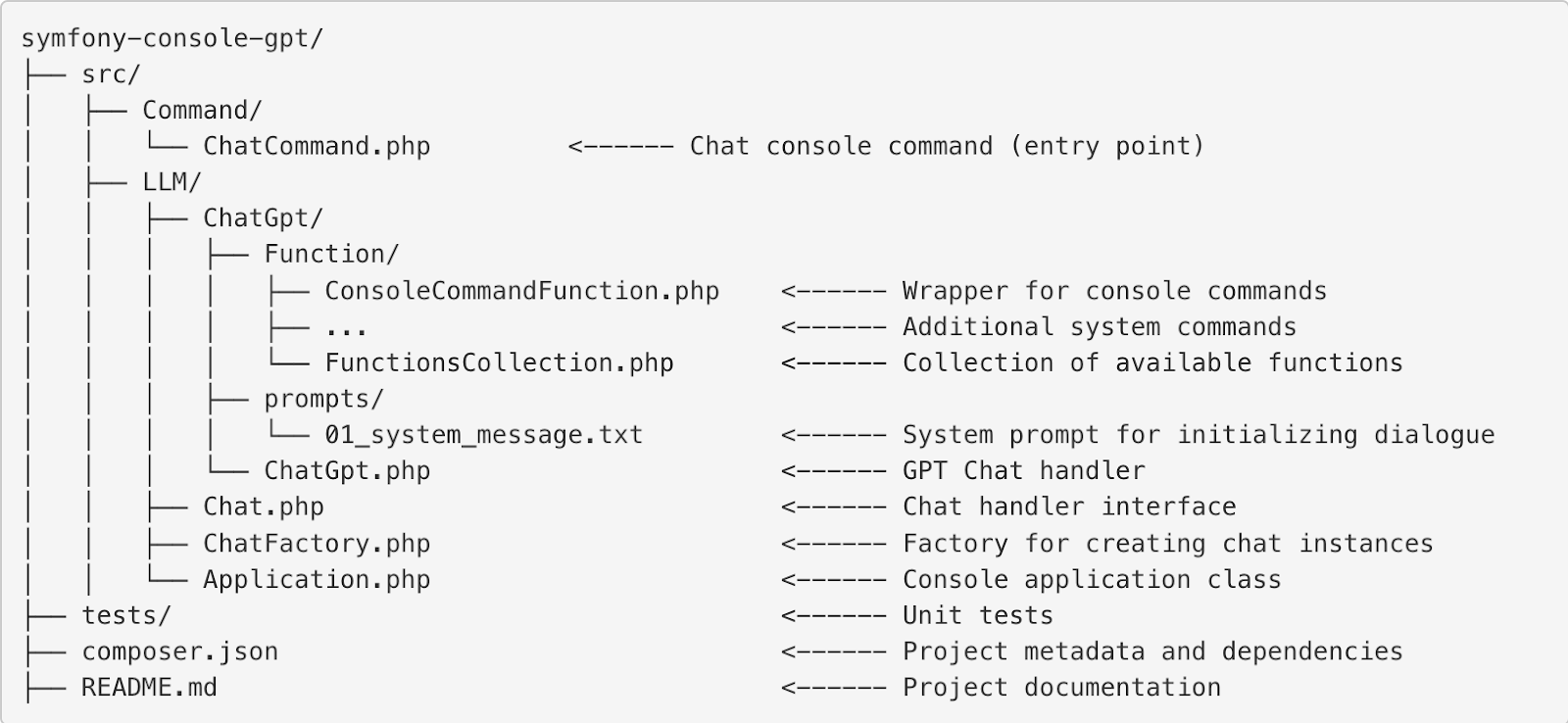

The project’s structure is simple and modular, making it both maintainable and extensible. Here’s a brief overview:

- The Command directory contains the main console command, ChatCommand. It serves as the entry point for interacting with the console application. This command can be integrated into any existing application based on symfony/console, either as a standalone command or as the main project command through inheritance from the base Application class. This lets users integrate ConsoleGpt functionality into their projects without complex configuration: it just works out of the box.

- The LLM directory is responsible for all logic related to working with language models. It contains the Chat interface, which defines chat behavior, as well as the ChatFactory, which handles the creation of specific chats. For example, the implementation for OpenAI models is located in the ChatGpt subdirectory, where the core functionality for interacting with the OpenAI API is concentrated.

- Functions for ChatGPT reside in the ChatGpt/Function subdirectory. A key element here is the ConsoleCommandFunction class, which acts as a wrapper for each console command. The collection of all functions is stored in FunctionsCollection, which is loaded when the chat starts and is used to generate requests to the language model. Additionally, this directory contains supplementary system commands, such as retrieving statistics, switching the language model, and other management tasks.

- The ChatGpt/prompts subdirectory contains a file named system_message.txt, which holds the system prompt used for the initial configuration of the model’s behavior and for setting the context for processing user requests.

The project structure was designed with future expansion and support for other language models in mind. While the current implementation supports only OpenAI ChatGPT, the Chat interface and ChatFactory make adding other models a straightforward task (provided the question of function calling in other solutions is resolved). This architecture keeps the project adaptable to different needs.
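As a rough picture of why an interface plus a factory makes new backends easy to add, consider the sketch below. It borrows the names Chat and ChatFactory from the description above, but every method name and signature here is invented for illustration, as is the `EchoChat` backend; the real classes in the repository may look different.

```php
<?php
declare(strict_types=1);

// Hypothetical contract: the real Chat interface in ConsoleGpt may define other methods.
interface Chat
{
    /** Send one user message and return the model's reply as plain text. */
    public function ask(string $message): string;
}

// Stand-in backend that needs no network access, useful for trying out the wiring.
final class EchoChat implements Chat
{
    public function ask(string $message): string
    {
        return "echo: $message";
    }
}

// Hypothetical factory: maps a backend name to a closure that builds a Chat.
final class ChatFactory
{
    /** @var array<string, callable(): Chat> */
    private array $builders = [];

    public function register(string $name, callable $builder): void
    {
        $this->builders[$name] = $builder;
    }

    public function create(string $name): Chat
    {
        if (!isset($this->builders[$name])) {
            throw new InvalidArgumentException("Unknown chat backend: $name");
        }

        return ($this->builders[$name])();
    }
}

$factory = new ChatFactory();
$factory->register('echo', fn(): Chat => new EchoChat());

echo $factory->create('echo')->ask('list all users'), PHP_EOL; // prints "echo: list all users"
```

Under this arrangement, supporting another model means writing one more class that implements the interface and registering it; the code that drives the chat never needs to know which backend it is talking to.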

Connecting the Chat to Your PHP Project

To integrate ConsoleGpt into your project, make sure the library is installed via Composer:

    composer require shcherbanich/symfony-console-gpt

After installing the library, you can integrate ChatCommand into your console application using one of several methods. Let’s look at the main options.

1. Adding the Command Using the add Method

The simplest way is to add ChatCommand to the list of available commands using the add() method in your console application. This option is suitable for quickly incorporating the command into an existing console project.

    <?php

    // Assumes Composer's autoloader has already been loaded.
    use ConsoleGpt\Command\ChatCommand;
    use Symfony\Component\Console\Application;

    $application = new Application();
    $application->add(new ChatCommand());
    $application->run();

2. Inheriting from the Base Application Class

If you want to make ChatCommand the core of your console application, you can inherit your application class from the base Application class provided by the library. This will automatically add ChatCommand to your application.

    <?php

    use ConsoleGpt\Application as ChatApplication;

    final class SomeApp extends ChatApplication
    {
        // Add your custom logic if needed
    }

3. Using DI in Symfony

If your application is built with Symfony and uses the Dependency Injection (DI) mechanism, you can register ChatCommand in services.yaml. This will allow Symfony to automatically recognize the command and make it available in your application.

To do this, add the following code to your services.yaml file:

    services:
        ConsoleGpt\Command\ChatCommand:
            tags:
                - { name: 'console.command', command: 'app:chat' }

After that, the command will be available in the console under the name app:chat. This approach is suitable for typical Symfony applications where all console commands are registered via the service container.

To run the application, you also need to set the environment variable OPENAI_API_KEY to your OpenAI API key. This key is used to authorize requests to the model’s API. You can obtain your key on the OpenAI API Keys page. There, you can also top up your balance if necessary and view usage statistics. You can set the environment variable on Linux/macOS by running the following command in the terminal:

    export OPENAI_API_KEY=your_key

If you use Windows, the command will need to look like this:

    set OPENAI_API_KEY=your_key

The Result

To test and debug the project, I created a demo application with several commands for managing the project. The results exceeded my expectations: the system worked exactly as intended and sometimes even better. The language model interpreted most requests accurately, invoked the appropriate commands, and asked for any missing information before executing the tasks correctly. Here’s a demo video. One of the most impressive aspects was the system’s ability to handle complex command sequences, which I believe demonstrates the enormous potential of this approach.

My experiment became not only an interesting technical challenge but also a starting point for thinking about new products. I believe this solution can inspire other developers to create their own projects, whether based on my code or simply by applying the ideas I implemented. For this reason, I chose the MIT license, which offers maximum freedom of use. You can use this project as a foundation for your experiments, improvements, or products.

Here are a few thoughts on how this system, its individual components, or this idea could be used:

- Scientific research: If you’re studying human-computer interaction or language model capabilities, this project could serve as a useful base for experiments and research.

- Building interactive interfaces for console applications: This solution transforms static console applications into dynamic and intuitive interfaces that can be taught to execute commands given in natural language.

- Assistive technology for people with disabilities: By integrating a speech-to-text model, you can create a convenient tool for blind or visually impaired people or for those with motor impairments. With this system, users could potentially interact with console applications using voice commands, greatly improving accessibility.

- Creating AI agents: This system can be used as a foundation for building fully functional AI agents. Since console commands can perform a wide range of tasks, such as launching programs, processing data, and interacting with external APIs, this solution can be seen as a flexible framework for constructing powerful tools.

Lessons Learned

This experiment gave me both positive insights and a clear view of certain limitations and potential risks. Here are the key takeaways:

- Anyone can create an LLM agent very quickly. Building an LLM agent is a task that any developer can accomplish in just a few evenings. For example, I created the first working version of this project in a single evening. Even though the code was far from perfect or optimized, it already delivered impressive results. This is inspiring because it shows that the technology is now accessible and open for experimentation.

- Humans can really talk with code. This experiment showed that modern language models can be used effectively for interacting with programs. They excel at interpreting natural language queries and converting them into sequences of actions. This opens up significant opportunities for automation and for improving user experience.

- GPT-3 performs just as well as GPT-4o for this task. It may sound surprising, but in my experience with this project it’s true. Recently, the improvement in model quality has become less apparent, raising the question: why pay more?

- Custom functionality is great but risky. Relying on the custom features of language models carries risks. In my case, the application depends heavily on OpenAI’s Function Calling feature. If the company decides to charge extra for its use, changes its behavior, or removes it entirely, the application will stop working. This highlights the inherent dependency on third-party tools when using external services.

- OpenAI’s API optimization increases costs for users. I noticed that OpenAI’s API is designed in a way that drives up user costs. For example, functions are billed at standard rates despite requiring excessive text transmission. Recently, OpenAI introduced a new feature called ‘tools’ that wraps functions but requires even more data to be transmitted for the functionality to work. This increases the context size, which, in turn, raises the cost of each request. The issue is especially noticeable when executing complex sequences of console commands, where the context grows with each step, making requests progressively more expensive.
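The cost growth described in the last point can be estimated with a little arithmetic. The sketch below assumes that every request resends the function definitions, the user message, and all earlier call/result pairs. The token counts are made-up illustrative values, not measurements, and `totalPromptTokens` is a hypothetical helper.

```php
<?php
declare(strict_types=1);

/**
 * Estimate the total prompt tokens billed across a multi-step command sequence,
 * assuming each request resends the function definitions and the full history.
 */
function totalPromptTokens(int $steps, int $definitionTokens, int $userTokens, int $tokensPerStep): int
{
    $total = 0;

    for ($i = 0; $i < $steps; $i++) {
        // Request $i carries the definitions, the user message, and $i earlier call/result pairs.
        $total += $definitionTokens + $userTokens + $i * $tokensPerStep;
    }

    return $total;
}

// Illustrative numbers only: 800 tokens of definitions, a 50-token request, 200 tokens per step.
echo totalPromptTokens(1, 800, 50, 200), PHP_EOL; // 850
echo totalPromptTokens(5, 800, 50, 200), PHP_EOL; // 6250
```

Under these assumptions, a five-step sequence costs more than seven times as much as a single request rather than five times, because the history term grows quadratically with the number of steps.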

Final Thoughts

Despite the identified downsides and risks, I’m pleased with the results of this experiment. It demonstrated that even minimal effort can yield impressive results, and that technologies like language models are genuinely transforming how software is developed. However, this experiment also served as a reminder of how important planning is and how careful we need to be when accounting for potential limitations. This is especially true when considering such solutions for commercial or long-term projects.

Just in case, here is a link to the project’s homepage on GitHub. Feel free to fork, commit, and discuss it with me and the community!