Keeping up with the latest research is a crucial part of the job for many data scientists. Faced with this challenge myself, I often struggled to maintain a consistent habit of reading academic papers and wondered whether I could design a system that would lower the barrier to exploring new research, making it easier to engage with developments in my field without large time commitments. Given my long commute to work and an innate lack of motivation to do weekend chores, an audio playlist I could listen to while doing both seemed like the obvious option.

This led me to build Scholcast, a simple Python package that creates detailed audio summaries of academic papers. While I had previously built versions using language models, recent advances in the context lengths of Transformer models and in speech synthesis finally aligned with all my requirements.

To build Scholcast, I primarily used OpenAI’s GPT-4o-mini. However, since I use the LangChain API to interact with the models, the system is flexible enough to accommodate other models, such as Claude (through AWS Bedrock) or locally hosted LLMs (for example, via Ollama).

The key components of this package were the following.

Converting PDF to LaTeX

The first step was to convert academic papers back to their original LaTeX format. I initially experimented with open-source packages like PyPDF2, but these tools struggled with complex academic content, particularly papers containing mathematical notation and special symbols. To overcome these limitations, I opted for the Mathpix API, which offers higher-quality PDF-to-LaTeX conversion. Instructions for obtaining a Mathpix API key can be found in their documentation here.
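The article does not show how Scholcast calls Mathpix, so the following is only a minimal, standard-library sketch of the submit, poll and download flow that Mathpix describes for PDFs. The endpoint paths, the `app_id`/`app_key` headers, the `pdf_id` and `status` response fields, and the zipped output at `<pdf_id>.tex` are assumptions to verify against the Mathpix API reference; every function name here is hypothetical.

```python
import json
import time
import urllib.request
from typing import Callable

# Assumed Mathpix PDF endpoint; verify against the Mathpix API reference.
MATHPIX_PDF_URL = "https://api.mathpix.com/v3/pdf"


def _auth_headers(app_id: str, app_key: str) -> dict[str, str]:
    # Assumed header names for Mathpix credentials.
    return {"app_id": app_id, "app_key": app_key}


def build_submit_request(pdf_url: str, app_id: str, app_key: str) -> urllib.request.Request:
    """Build (without sending) a request asking Mathpix to convert a PDF hosted at pdf_url."""
    body = json.dumps({"url": pdf_url}).encode("utf-8")
    headers = {**_auth_headers(app_id, app_key), "Content-Type": "application/json"}
    return urllib.request.Request(MATHPIX_PDF_URL, data=body, headers=headers, method="POST")


def submit_pdf(pdf_url: str, app_id: str, app_key: str) -> str:
    """Send the conversion request; the JSON response is assumed to contain 'pdf_id'."""
    with urllib.request.urlopen(build_submit_request(pdf_url, app_id, app_key)) as resp:
        return json.load(resp)["pdf_id"]


def fetch_status(pdf_id: str, app_id: str, app_key: str) -> str:
    """Read the conversion status; the JSON response is assumed to contain 'status'."""
    req = urllib.request.Request(f"{MATHPIX_PDF_URL}/{pdf_id}", headers=_auth_headers(app_id, app_key))
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["status"]


def wait_until_completed(get_status: Callable[[], str], poll_seconds: float = 5.0,
                         timeout_seconds: float = 600.0) -> None:
    """Poll a status callback until it reports 'completed', failing on 'error' or timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status == "completed":
            return
        if status == "error":
            raise RuntimeError("Mathpix reported a conversion error")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"conversion still '{status}' after {timeout_seconds}s")
        time.sleep(poll_seconds)


def download_latex_zip(pdf_id: str, app_id: str, app_key: str, path: str) -> None:
    """Save the LaTeX output, assumed to be served as a zip archive at '<pdf_id>.tex'."""
    req = urllib.request.Request(f"{MATHPIX_PDF_URL}/{pdf_id}.tex", headers=_auth_headers(app_id, app_key))
    with urllib.request.urlopen(req) as resp, open(path, "wb") as fh:
        fh.write(resp.read())


# Example usage (makes network calls and needs real credentials):
# pdf_id = submit_pdf("https://arxiv.org/pdf/1706.03762", APP_ID, APP_KEY)
# wait_until_completed(lambda: fetch_status(pdf_id, APP_ID, APP_KEY))
# download_latex_zip(pdf_id, APP_ID, APP_KEY, "attention_latex.zip")
```

Keeping the polling loop separate from the HTTP call makes the waiting logic easy to test and lets you swap in a different status source.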

As of this writing (Nov 29, 2024), I was unable to use OpenAI’s API to convert PDF to LaTeX with high fidelity. I will open a pull request if that changes.

Summary Generation and Understanding

This is the core component of the tool, responsible for generating comprehensive paper summaries. The key challenge was determining the right depth of understanding. Ideally, we would want an understanding equivalent to a close reading of the paper, but producing such extensive coverage in audio format, especially for mathematical concepts, proved difficult.

Initial experiments used standard prompts such as:

"Provide a clear and concise explanation of the research paper {academic_paper}. Include the main research question, the methodology used, key findings, and the implications of the study."

These prompts generated superficial summaries. For instance, when applied to the seminal paper “Attention Is All You Need,” it produced the following explanation:

As you can see, while the explanation mentions concepts like Self-Attention and Multi-Head Attention, it does not cover them in any depth, and Positional Encoding is not mentioned at all. The LLM is clearly either glossing over or skipping entire concepts.

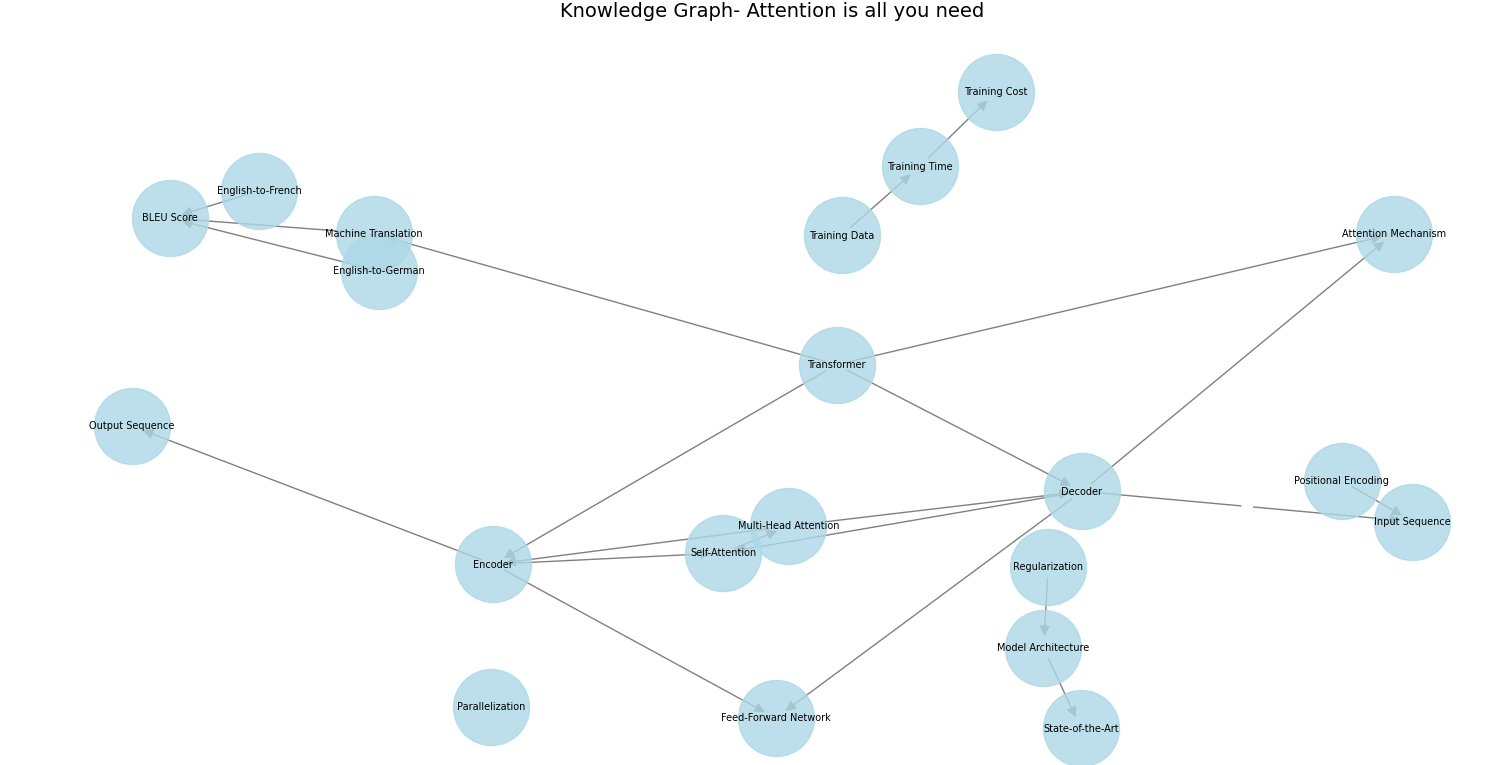

To address this limitation, I developed a multi-step approach. First, I prompted the LLM to create a knowledge graph of the paper’s key concepts, with nodes representing the concepts and edges representing the relationships between them.

Analyze the following {academic_paper} and create a knowledge graph.
List the main concepts as nodes and their relationships as edges.
Format your response as a list of nodes followed by a list of edges:

Nodes:
1. Concept1
2. Concept2
...

Edges:
1. Concept1 -> Concept2: Relationship
2. Concept2 -> Concept3: Relationship
...

It generated the following graph for the paper:

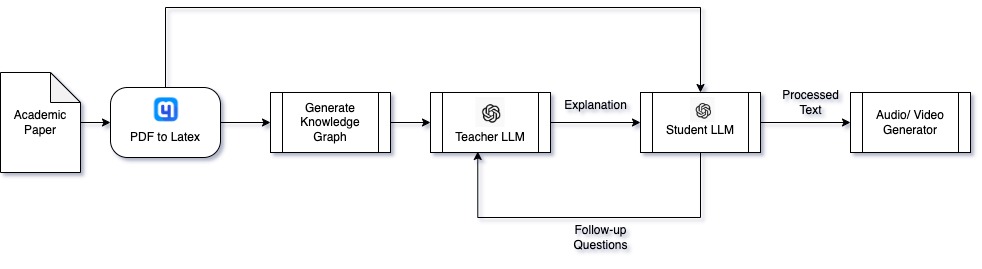
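The article does not show how the model's text response is turned back into a graph. As a minimal sketch, assuming the model follows the requested format exactly (numbered items, edges written as `A -> B: relationship`), a small parser could look like this; the function name is hypothetical, and lines that do not match the format are silently skipped.

```python
import re

# Matches numbered node lines such as "1. Self-Attention".
NODE_RE = re.compile(r"^\d+\.\s*(.+?)\s*$")
# Matches numbered edge lines such as "1. Transformer -> Self-Attention: uses".
EDGE_RE = re.compile(r"^\d+\.\s*(.+?)\s*->\s*(.+?)\s*:\s*(.+?)\s*$")


def parse_knowledge_graph(text: str) -> tuple[list[str], list[tuple[str, str, str]]]:
    """Parse the 'Nodes:' / 'Edges:' response format requested by the prompt."""
    nodes: list[str] = []
    edges: list[tuple[str, str, str]] = []
    section = None
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped == "...":
            continue  # skip blank lines and the ellipsis placeholder
        if stripped.lower() in ("nodes:", "edges:"):
            section = stripped[:-1].lower()  # switch to the named section
            continue
        # Lines that do not match the expected pattern are ignored.
        if section == "nodes" and (m := NODE_RE.match(stripped)):
            nodes.append(m.group(1))
        elif section == "edges" and (m := EDGE_RE.match(stripped)):
            edges.append(m.groups())
    return nodes, edges
```

In practice, LLM output drifts from the requested format now and then (extra bold markers, bullets instead of numbers), so a real implementation would likely need a more forgiving parser or a structured-output mode.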

This graph then served as a roadmap for the Teacher LLM to explain the paper, resulting in noticeably greater depth.
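One way to use the graph as a roadmap is to list every node and edge in the Teacher's prompt and then check which concepts the resulting explanation never mentions. The sketch below does this under stated assumptions: the prompt wording and function names are hypothetical, not Scholcast's. The substring check can flag an omission like the missing Positional Encoding, provided that concept appears as a node, but it cannot judge whether a concept was explained well.

```python
def build_teacher_prompt(paper: str, nodes: list[str],
                         edges: list[tuple[str, str, str]]) -> str:
    """Embed the knowledge graph in the Teacher prompt as a checklist of concepts."""
    concepts = "\n".join(f"- {node}" for node in nodes)
    relations = "\n".join(f"- {src} -> {dst}: {rel}" for src, dst, rel in edges)
    return (
        "You are a teacher explaining the research paper below to a student.\n"
        "Use this knowledge graph as your roadmap: explain every concept "
        "and use the relationships to connect them.\n\n"
        f"Concepts:\n{concepts}\n\n"
        f"Relationships:\n{relations}\n\n"
        f"Paper:\n{paper}"
    )


def uncovered_concepts(explanation: str, nodes: list[str]) -> list[str]:
    """Return graph nodes whose names never appear (case-insensitively) in the explanation."""
    text = explanation.lower()
    return [node for node in nodes if node.lower() not in text]
```

Any concepts returned by `uncovered_concepts` could be fed back to the Teacher in a follow-up request.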

To further enhance the summaries, I introduced a Student LLM that reads the paper along with the Teacher’s first set of explanations and asks the Teacher LLM clarifying questions.

This interaction led to more detailed explanations of complex concepts.

As you can see, concepts were covered in much more detail, along with some fairly interesting follow-up questions from the Student LLM.
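The Teacher/Student exchange can be sketched as a simple loop: the Teacher gives an initial explanation, then for a fixed number of rounds the Student asks a question about the conversation so far and the Teacher answers it. The round count, prompt wording and function name are hypothetical; Scholcast's actual prompts may differ. Passing the models in as plain callables keeps the loop independent of the provider. With LangChain, for example, `teacher` could be `lambda p: ChatOpenAI(model="gpt-4o-mini").invoke(p).content` using the `langchain_openai` package.

```python
from typing import Callable

# Any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def teacher_student_dialogue(paper: str, teacher_prompt: str, teacher: LLM,
                             student: LLM, rounds: int = 3) -> list[tuple[str, str]]:
    """Alternate Student questions and Teacher answers after an initial explanation."""
    transcript: list[tuple[str, str]] = [("Teacher", teacher(teacher_prompt))]
    for _ in range(rounds):
        history = "\n\n".join(f"{role}: {text}" for role, text in transcript)
        # The Student sees the paper and everything said so far.
        question = student(
            f"You are a student who has read this paper:\n{paper}\n\n"
            f"Conversation so far:\n{history}\n\n"
            "Ask one clarifying question about the part that is least clear."
        )
        transcript.append(("Student", question))
        # The Teacher answers with the same context plus the new question.
        answer = teacher(
            f"Paper:\n{paper}\n\nConversation so far:\n{history}\n\n"
            f"Student: {question}\n\nAnswer the question in detail."
        )
        transcript.append(("Teacher", answer))
    return transcript
```

The returned list of `(role, text)` pairs is a convenient shape for the audio step, since each turn can be voiced according to its role.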

Converting the Paper Into Audio/Video Format

This component converts the generated summary into audio or video. For audio, I used OpenAI’s text-to-speech model tts-1-hd, with the “nova” and “echo” voices distinguishing the Teacher and Student roles, respectively. This adds variety and structure to the audio presentation.
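A minimal sketch of the voicing step, assuming a transcript of `(role, text)` pairs: each turn is mapped to its role's voice and split at sentence boundaries so that no request exceeds the speech endpoint's input limit (assumed here to be 4,096 characters; check OpenAI's current documentation). The `speak` callable is a hypothetical hook. With the v1 `openai` Python SDK, it could be something like `lambda text, voice: client.audio.speech.create(model="tts-1-hd", voice=voice, input=text).content`.

```python
import re
from typing import Callable

# Role-to-voice mapping described in the article.
VOICES = {"Teacher": "nova", "Student": "echo"}
# Assumed per-request character limit for OpenAI's speech endpoint.
MAX_TTS_CHARS = 4096


def chunk_text(text: str, limit: int = MAX_TTS_CHARS) -> list[str]:
    """Split text at sentence boundaries so every chunk fits in one TTS request."""
    chunks: list[str] = []
    current = ""
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        # Hard-split any single sentence that is longer than the limit.
        while len(sentence) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:limit])
            sentence = sentence[limit:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def synthesize(transcript: list[tuple[str, str]],
               speak: Callable[[str, str], bytes]) -> list[bytes]:
    """Voice each (role, text) turn with its role's voice; return audio clips in order."""
    clips: list[bytes] = []
    for role, text in transcript:
        voice = VOICES[role]
        for chunk in chunk_text(text):
            clips.append(speak(chunk, voice))
    return clips
```

Splitting at sentence boundaries rather than at a fixed character offset avoids cutting words in half, which would otherwise be audible at chunk joins.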

For video, I opted for a simple but effective method: combining a single static image with the audio track using the pydub and moviepy packages. This produces a basic but functional video that complements the audio content.
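The article joins the audio with pydub. As a dependency-free illustration of just the joining step, here is a sketch that uses Python's standard-library `wave` module instead, which is a swapped-in technique and not Scholcast's code. It assumes every clip is a standard PCM WAV with the same channel count, sample width and sample rate, and it inserts a short pause between clips. The function name and the 300 ms default are hypothetical. Attaching the joined track to a static image with moviepy, as described above, is not shown.

```python
import io
import wave


def concat_wav(clips: list[bytes], pause_ms: int = 300) -> bytes:
    """Join in-memory WAV clips that share one format, inserting silence between them."""
    if not clips:
        raise ValueError("no clips to join")
    with wave.open(io.BytesIO(clips[0]), "rb") as first:
        params = first.getparams()
    # Silent samples: 8-bit WAV is unsigned (centred on 0x80); wider widths are signed (0).
    fill = b"\x80" if params.sampwidth == 1 else b"\x00"
    pause_frames = params.framerate * pause_ms // 1000
    silence = fill * (pause_frames * params.sampwidth * params.nchannels)
    out = io.BytesIO()
    with wave.open(out, "wb") as writer:
        writer.setparams(params)  # the frame count in the header is patched as frames are written
        for i, clip in enumerate(clips):
            with wave.open(io.BytesIO(clip), "rb") as reader:
                # Channels, sample width and sample rate must match the first clip.
                if reader.getparams()[:3] != params[:3]:
                    raise ValueError(f"clip {i} has a different audio format")
                if i > 0:
                    writer.writeframes(silence)
                writer.writeframes(reader.readframes(reader.getnframes()))
    return out.getvalue()
```

pydub handles format conversion and resampling for you, which is one reason to prefer it in practice. This version simply refuses clips whose formats differ.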

Below is the schematic for the end-to-end workflow:

While not equivalent to an in-depth study, the final output provides comprehensive coverage that can effectively substitute for an initial read-through.

Conclusion

You can find the source code for Scholcast here, and refer to this README for instructions on how to install and use it. You can also check out the Scholcast YouTube channel for summaries of a range of interesting papers on topics from LLMs to optimization and ML algorithms.