In the past couple of months, I’ve spoken with a number of industry professionals, including software engineers, consultants, senior managers, scrum masters, and even IT support staff, about how they use generative AI (GenAI) and what they understand about artificial intelligence. Many of them believe that using “AI” means interacting with applications like ChatGPT and Claude or relying on built-in tools like Microsoft Copilot. While these are excellent tools for your day-to-day activities, they do not necessarily teach you how to build a GenAI application from the ground up. Understanding these technicalities is essential to brainstorming ideas and creating use cases to solve and automate your work.

There are thousands of tutorials on large language models (LLMs), retrieval-augmented generation (RAG), and embeddings, yet many still leave novice AI enthusiasts confused about the “why” behind each step.

This article is for those beginners who want a simple, step-by-step approach to building their own custom GenAI app. We’ll illustrate each part with an example that uses two Sony LED TV user manuals. By the end, you’ll understand:

- How to organize your data (why chunking is important).

- What embeddings are, and how to generate and store them.

- What RAG is, and how it makes your answers more factual.

- How to put it all together in a user-friendly way.

Why Build a GenAI Chatbot for Sony TV Manuals?

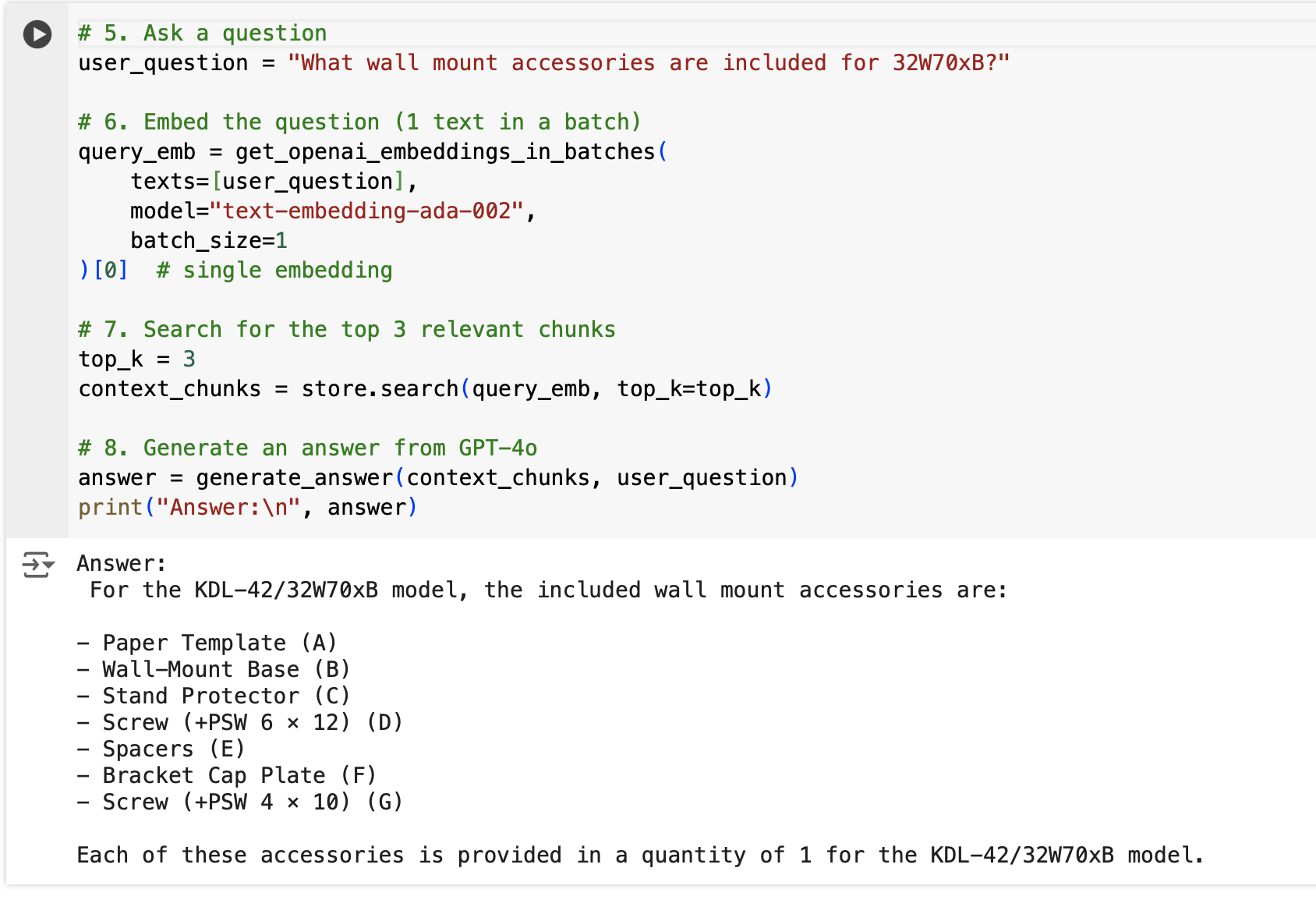

From an industry standpoint, imagine your company has a customer support department, and you have 100 different products with a 50-page user manual for each of them. Instead of reading through 5,000 pages of PDFs, a user can ask, “What wall mount accessories are included for 32W70xB?” and get a targeted answer.

A GenAI application in this scenario can dramatically reduce customer support overhead and help your representatives find correct answers quickly. Applications like ChatGPT or Claude can give you a generic answer, but this application will be specific to your product line. Whether you’re a support engineer, a tech writer, or a curious developer, this approach makes documentation more accessible, accelerates troubleshooting, and enhances customer satisfaction.

Conceptual Overview

1. Prompt Engineering in Plain English

Prompt engineering is the art of telling the model exactly what you want and how you want it. Think of it as crafting a “job description” for your AI assistant. The more context you provide (e.g., “Use these manual excerpts” or “Use this context”), the better and more on-topic the AI’s responses will be.
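To make the “job description” idea concrete, here is a minimal sketch of a prompt template. The function name `build_prompt`, the excerpt labels, and the exact wording of the instructions are illustrative assumptions, not a required format; adjust them to your own product and tone.

```python
def build_prompt(question: str, excerpts: list[str]) -> str:
    """Assemble a prompt that states the role, the allowed sources, and the task."""
    # Number the excerpts so they stay distinguishable inside the prompt.
    context = "\n\n".join(
        f"[Excerpt {i}]\n{text.strip()}" for i, text in enumerate(excerpts, start=1)
    )
    return (
        "You are a support assistant for Sony LED TVs.\n"
        "Answer using only the manual excerpts below. "
        "If the excerpts do not contain the answer, say you do not know.\n\n"
        f"Manual excerpts:\n{context}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


# The excerpt below is a placeholder, not a quote from a real manual.
print(build_prompt(
    "What wall mount accessories are included?",
    ["(text retrieved from the manual would go here)"],
))
```

Telling the model what to do when the excerpts lack the answer is a small addition that tends to keep responses on-topic.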

2. RAG (Retrieval-Augmented Generation) and Why It Matters

Retrieval-augmented generation (RAG) keeps your answers grounded in facts from your data source (e.g., the Sony manuals). Without RAG, a model might “hallucinate” or produce outdated information. As we discussed before, you had 100 products and a 50-page manual for each; now, imagine you added 50 more products. Your documentation grew from 5,000 pages to 7,500. With RAG, the application dynamically fetches the relevant document chunks before generating the answer, which makes it both flexible and accurate.

3. Vector Embeddings 101

Words can be turned into numerical vectors that capture semantic meaning. So if someone asks, “Which screws are not included?” the model can find relevant text about “not provided” even if the exact keywords aren’t used. This approach is essential for building user-friendly, intuitive search and Q&A experiences.
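A common way to compare two embeddings is cosine similarity: the closer the score is to 1.0, the more similar the meaning. The sketch below uses tiny hand-made vectors purely for illustration; they are assumptions, not real embeddings, which typically have hundreds or thousands of dimensions and come from an embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention for this sketch: a zero vector matches nothing
    return dot / (norm_a * norm_b)


# Hand-made 3-dimensional vectors, for illustration only.
toy_vectors = {
    "Screws are not provided.": [0.9, 0.1, 0.0],
    "Connect the antenna cable.": [0.0, 0.2, 0.9],
}
# Pretend embedding of the question "Which screws are not included?"
query_vector = [0.8, 0.2, 0.1]

best_match = max(
    toy_vectors,
    key=lambda text: cosine_similarity(query_vector, toy_vectors[text]),
)
print(best_match)
```

The query shares no keyword like “included” with the winning sentence; it wins because its vector points in nearly the same direction.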

Project Setup

Below is a step-by-step guide to building a GenAI application that can reference the contents of two Sony LED TV user manuals, all using Google Colab. We’ll cover why Google Colab is a great environment for rapid prototyping, how to set it up, where to download the PDF manuals, and how to generate embeddings and run queries using the OpenAI API and FAISS. This guide is specifically for beginners who want to understand why each step matters rather than just copy-pasting code.

1. Why Google Colab?

Google Colab is a free, cloud-based Jupyter notebook environment that makes it easy to:

- Bootstrap your environment: It comes preconfigured with Python, so you don’t have to install Python locally.

- Install dependencies quickly: Use `!pip install ...` commands to get the libraries you need.

- Leverage GPU/TPU (optional): For larger models or heavy computations, you can select hardware accelerators.

- Share notebooks: You can easily share a single link with peers to demonstrate your GenAI setup.

In short, Colab handles the overhead so you can focus on writing and running your code.

2. What Are We Building?

We’re going to build a small question-answering (QA) system that uses RAG to answer queries based on the contents of two Sony LED TV manuals:

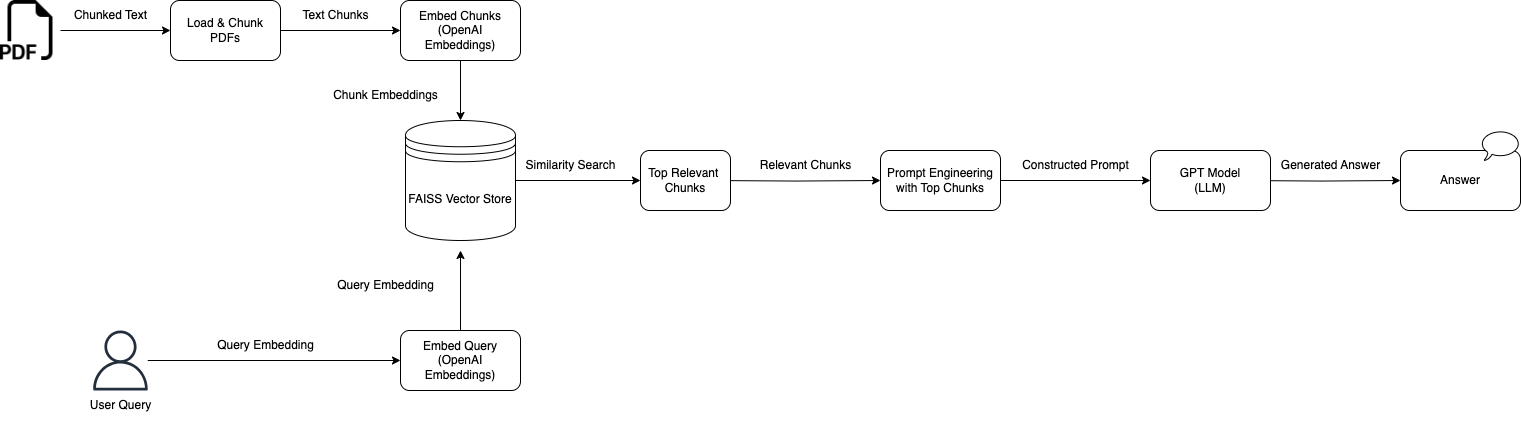

Here’s the basic workflow:

- Read the PDFs and split them into text chunks.

- Embed those chunks using OpenAI’s embeddings endpoint.

- Store the embeddings in a FAISS vector index for semantic search.

- When a user asks a question:

  - Convert the question into an embedding.

  - Search FAISS for the most relevant chunks.

  - Pass those chunks plus the user question to an LLM (like GPT-4) to generate a tailored answer.

This approach is RAG because the language model is “augmented” with additional knowledge from your data, ensuring it stays factual to your specific domain.
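The first step, chunking, matters because a whole manual is too long to embed or place in a prompt as one piece, and smaller chunks let retrieval return only the passages that relate to the question. Here is a minimal sketch of a character-based splitter with overlap. The function name and the default sizes are assumptions chosen for illustration; a real project might split on sentences, pages, or headings instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size characters that overlap by `overlap`."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))
```

The overlap repeats a little text between neighbouring chunks so that a sentence cut at a boundary still appears intact in at least one of them.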

3. About the OpenAI API Key

To use OpenAI’s embeddings and chat completion services, you’ll need an OpenAI API key. This key uniquely identifies you and grants you access to OpenAI’s models.

How to get it:

- Sign up (or log in) at OpenAI’s Platform.

- Go to your account dashboard/settings and find the “API Keys” section.

- Create a new secret key.

- Copy and save it; you’ll use it in your code to authenticate requests.
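Once you have the key, it is safer to read it from an environment variable than to paste it into a notebook cell that you might share. The helper below is a minimal sketch; the function name `load_api_key` is hypothetical, while `OPENAI_API_KEY` is the variable name the official OpenAI Python library looks for by default.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable, or fail with a clear message."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before running the notebook."
        )
    return key
```

Failing early with a readable message is usually easier to debug than an authentication error from deep inside a later API call.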

Structure

The diagram above outlines a RAG pipeline for answering questions from Sony TV manuals. We:

- Load and chunk PDFs

- Embed chunks using OpenAI’s embeddings

- Store them in a FAISS index

- Embed the user query

- Search FAISS for the top-matching chunks

- Construct a prompt and pass it to GPT

- Generate a context-aware answer

This pipeline combines text retrieval with a powerful LLM, which in our case is OpenAI’s GPT-4o. One of the key advantages of this RAG architecture is that it augments the language model with domain-specific context retrieved from the PDFs, significantly reducing hallucinations and improving factual accuracy.

By breaking the process down into these discrete steps (chunking PDFs, embedding, searching in FAISS, constructing a prompt, and finally generating a response), we get an effective and scalable Q&A solution that’s easy to update with new manuals or additional documents.
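To see how the steps fit together without needing an API key, here is a toy version of the retrieval half of the pipeline that runs on the Python standard library alone. It deliberately swaps in stand-ins: word counts replace OpenAI embeddings (so it matches shared words, not meaning), and a sorted list replaces the FAISS index. All function names and the sample chunks are made up for illustration, and the final call to the LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts. A real pipeline would call an embeddings API."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(
        sum(c * c for c in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(chunks: list[str]) -> list[tuple[str, Counter]]:
    """Stand-in for the vector index: keep each chunk next to its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def search(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """Brute-force nearest-neighbour search; a vector index does this job at scale."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: similarity(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def answer_prompt(index: list[tuple[str, Counter]], question: str, k: int = 2) -> str:
    """Retrieve the top-k chunks and assemble the prompt that would go to the LLM."""
    context = "\n---\n".join(search(index, question, k))
    return f"Use this context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Made-up sample chunks, not quotes from the real manuals.
sample_chunks = [
    "Wall mount bracket screws are not supplied with the TV.",
    "Press the HOME button on the remote to open the menu.",
    "Connect the antenna cable to the rear input.",
]
index = build_index(sample_chunks)
top = search(index, "Are wall mount screws supplied?", k=1)
print(top[0])
```

Adding a new manual in this design only means embedding its chunks and appending them to the index; the model itself never has to be retrained.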

Code and Step-by-Step Guide

For the sake of brevity, we’ll move to Google Colab and go through these steps one by one.

By the end of the tutorial, you will see how this application was able to answer a very specific question related to the PDFs.

Real-World Insights: Speed, Token Limits, and More

- Startup time: Generating embeddings on every request can be really slow. Caching them or precomputing them on startup will significantly improve your response time.

- Parallelization: For larger corpora, consider multiprocessing or batch requests to speed up embedding generation.

- Token limits: You need to control how large your combined text chunks and user queries are. Consider setting some limits while developing your application.
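Two of these points can be sketched in a few lines. The class and function names below are hypothetical, the cache lives only in memory (it would need to be saved to disk to survive a restart), and the character budget is only a rough proxy for tokens; exact counts require the model’s tokenizer.

```python
import hashlib


class EmbeddingCache:
    """Wrap an embedding function so each distinct text is embedded only once."""

    def __init__(self, embed_fn):
        self._embed_fn = embed_fn
        self._store = {}

    def get(self, text: str):
        # Hash the text so the cache key stays small even for long chunks.
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self._store:
            self._store[key] = self._embed_fn(text)  # the slow call happens only here
        return self._store[key]


def trim_to_budget(chunks: list[str], max_chars: int) -> list[str]:
    """Keep chunks in ranked order until adding the next one would exceed max_chars."""
    kept, used = [], 0
    for chunk in chunks:
        if used + len(chunk) > max_chars:
            break
        kept.append(chunk)
        used += len(chunk)
    return kept
```

Pass the retrieved chunks through `trim_to_budget` before building the prompt so that a long question plus many chunks cannot overflow the model’s context window unnoticed.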

Conclusion

For all the novice developers and tech enthusiasts out there: learning to build your own AI-driven application is immensely empowering. Instead of being limited to ChatGPT, Claude, or Microsoft Copilot, you can craft an AI solution that’s tailored to your domain, your data, and your users’ needs.

By combining prompt engineering, RAG, and vector embeddings, you’re not just following a trend; you’re solving real problems, saving real time, and delivering direct value to anyone who needs quick, factual answers. That’s where the true impact of GenAI lies.